Kubernetes (also known as K8s) is an open-source system for automating the deployment, scaling and management of containerized applications.

It provides three main benefits:

Automated operations: K8s has built-in commands and controllers that automate routine operational tasks such as rollouts, scaling and restarts;

Infrastructure abstraction: K8s handles the computing, networking and storage on behalf of the workloads, which lets developers focus on their applications;

Service health monitoring: K8s runs health checks on your services and automatically restarts containers that fail or stall.
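Health checks are declared per container. The Pod below is a minimal sketch that defines a liveness probe: the kubelet periodically sends an HTTP GET request and restarts the container after repeated failures. The Pod name, the image and the probed path are assumptions chosen for illustration.

```yaml
# Hypothetical Pod used only to illustrate a liveness probe.
apiVersion: v1
kind: Pod
metadata:
  name: web-health-demo          # hypothetical name
spec:
  containers:
    - name: web
      image: nginx:1.25          # placeholder image that serves HTTP on port 80
      ports:
        - containerPort: 80
      livenessProbe:
        httpGet:
          path: /                # assumed health endpoint; nginx answers on /
          port: 80
        initialDelaySeconds: 5   # wait 5 s before the first check
        periodSeconds: 10        # then check every 10 s
        failureThreshold: 3      # restart after 3 consecutive failures
```

Without a probe, K8s only restarts a container when its process exits. Detecting a process that is still running but hung (a "stalled" service) requires a probe like this one.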

K8s is not Docker. Docker is a containerization platform that relies on operating-system-level virtualization. It lets a developer select a desired base OS image, configure the environment and add the libraries the app needs to run. K8s, by contrast, is a platform for managing and orchestrating containers, and it is not limited to Docker.

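In K8s version 1.26, any container runtime that implements the Container Runtime Interface (CRI) can be used. The runtimes covered in the official documentation are:

containerd;

CRI-O;

Docker Engine (through the cri-dockerd adapter);

Mirantis Container Runtime.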

Architecture

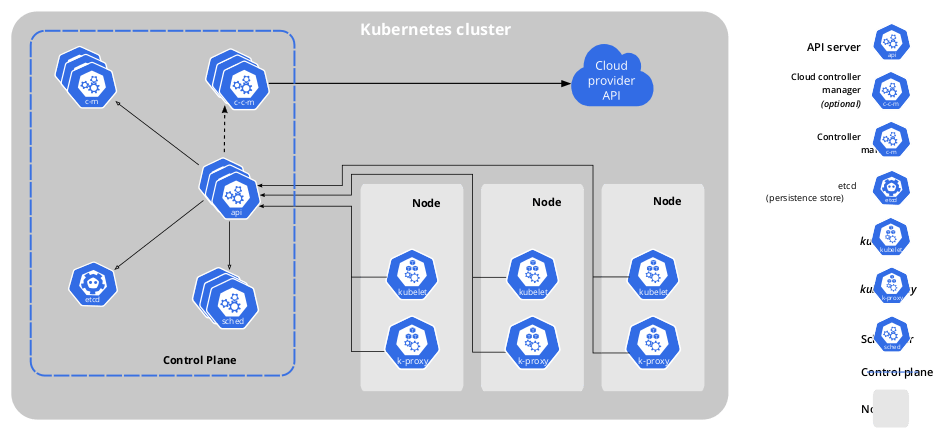

A K8s cluster consists of a set of worker machines called Nodes. The Nodes host the Pods, which run the containerized workloads. The Control Plane orchestrates both the Nodes and the Pods. The Control Plane and the Nodes communicate through the Kubernetes API, which is served by the API server (kube-apiserver).

Fig. 1 - Architecture of Cloud Controller Manager (The Kubernetes Authors, n.d.)

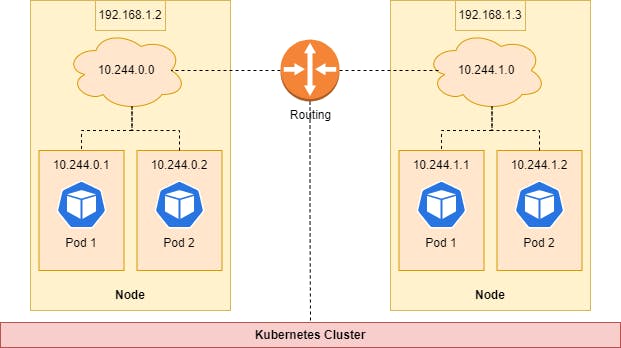

Each Node manages its internal network and makes sure that every Pod on that Node gets a unique IP address. Communication between Pods on different Nodes is done by routing.

Concepts

Workload

A workload is an application running on K8s. It can be a single component or several components working together. Either way, on K8s it runs inside a set of Pods.

Pod

A Pod is a group of one or more containers, with shared storage and network resources, and a specification for how to run the containers.

Besides application containers, a Pod can also contain init containers, which run to completion during Pod startup before the application containers start. You can also inject ephemeral containers into a running Pod for debugging.
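The Pod below is a minimal sketch that combines both ideas. An init container writes a file into shared storage, and the application container then serves that file, so the two containers share storage as described above. The names, images and file contents are assumptions made for illustration.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: init-demo                # hypothetical name
spec:
  volumes:
    - name: shared-data          # storage shared by all containers in the Pod
      emptyDir: {}
  initContainers:
    # Runs to completion before the application container starts.
    - name: prepare-content
      image: busybox:1.36
      command: ["sh", "-c", "echo 'Hello from the init container' > /data/index.html"]
      volumeMounts:
        - name: shared-data
          mountPath: /data
  containers:
    # Application container: nginx serves the file written by the init container.
    - name: web
      image: nginx:1.25
      ports:
        - containerPort: 80
      volumeMounts:
        - name: shared-data
          mountPath: /usr/share/nginx/html
```

To attach an ephemeral debugging container to this running Pod, you could run kubectl debug -it init-demo --image=busybox:1.36 --target=web.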

Workload Resources

Workload Resources configure controllers to ensure that the correct Pods are running to match the desired state you have defined for your application.

| Workload Resource | Use Cases |
| --- | --- |
| Deployment | Deployments are used for stateless applications (applications that don't save data). A Deployment is a higher-level abstraction that manages one or more ReplicaSets to provide a controlled rollout of a new version. |
| ReplicaSet | ReplicaSets are usually managed declaratively through a Deployment. A ReplicaSet ensures that a specified number of Pods is running in the cluster at any given time. These Pods are called replicas and are the mechanism for availability in Kubernetes. |
| StatefulSet | As the name implies, StatefulSets are often used for stateful applications (applications that save data). StatefulSets can also be used for highly available applications and for applications that run multiple Pods with a leader among them. One example is a highly available RabbitMQ messaging service. |
| DaemonSet | DaemonSets run a copy of a Pod on every Node (or on selected Nodes), so they are often used for log collection or node monitoring. For example, the Elasticsearch, Fluentd, and Kibana (EFK) stack can be used for log collection. |
| Job and CronJob | Jobs run Pods until a task completes, and CronJobs create Jobs on a repeating schedule. Both are used for work that only needs to run at specific times, such as creating a database backup. |
| Custom Resource | Custom resources extend the Kubernetes API with new resource types. These types get top-level kubectl support like built-in resources and can be created and updated with Kubernetes libraries and CLIs. An example is cert-manager, a certificate manager that adds resources such as Certificate to enable HTTPS and TLS support. |
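The CronJob below is a minimal sketch of the database-backup use case from the table. The image, its arguments and the schedule are hypothetical placeholders. A real backup would also need credentials and a storage volume.

```yaml
# Hypothetical nightly database backup.
apiVersion: batch/v1
kind: CronJob
metadata:
  name: db-backup                # hypothetical name
spec:
  schedule: "0 2 * * *"          # cron syntax: every day at 02:00
  jobTemplate:                   # each run creates a Job from this template
    spec:
      backoffLimit: 2            # retry a failed run at most twice
      template:
        spec:
          restartPolicy: OnFailure   # Job Pods must use OnFailure or Never
          containers:
            - name: backup
              image: example.com/db-backup:1.0   # hypothetical image
              args: ["--target", "/backups"]     # hypothetical arguments
```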

Basics of Networking

Every Pod receives its own unique cluster-wide IP address. As a result, you do not need to create links between Pods or map container ports to host ports, which simplifies load balancing and application configuration. K8s imposes the following requirements on any networking implementation:

Pods can communicate with all other Pods on any other Node without NAT;

agents on a Node (e.g. system daemons, kubelet) can communicate with all Pods on that Node.

These requirements are met by the cluster's network implementation, typically a Container Network Interface (CNI) plugin.

Fig. 2 - Communication between Pods in different Nodes in the same cluster is done by routing

Every Node in a cluster has its own internal subnet from which its Pods' IP addresses are assigned. Any Pod must be able to reach any Pod on any Node, so routing is set up between the Nodes' subnets.
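With kubeadm, for example, the cluster-wide Pod address range is declared when the cluster is created. When podSubnet is set, the controller manager carves a smaller range out of it for each Node (a /24 per Node by default for IPv4) and records it in that Node's spec.podCIDR. Whether those per-Node ranges are actually used depends on the network plugin. The CIDRs below are example values only.

```yaml
# Example kubeadm configuration, used with: kubeadm init --config cluster.yaml
apiVersion: kubeadm.k8s.io/v1beta3
kind: ClusterConfiguration
kubernetesVersion: v1.26.0
networking:
  podSubnet: 10.244.0.0/16       # cluster-wide Pod range; split into per-Node subnets
  serviceSubnet: 10.96.0.0/12    # virtual IPs for Services (kubeadm's default)
```

After the Nodes join, kubectl get nodes -o jsonpath='{.items[*].spec.podCIDR}' lists the subnet assigned to each Node.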

Services

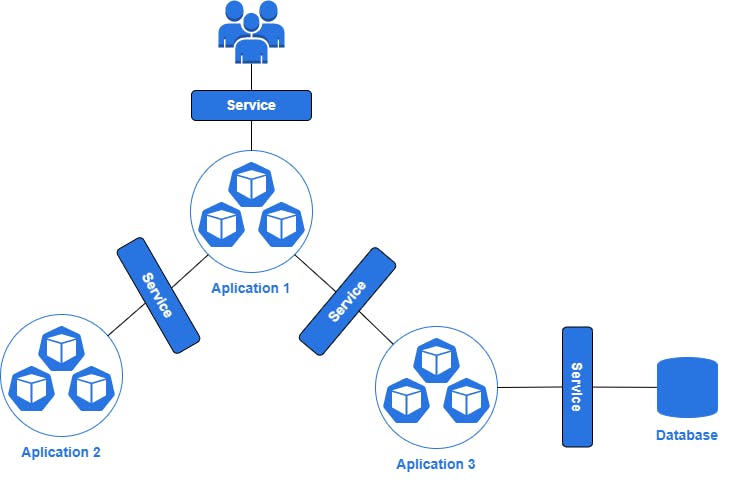

A Service is a method for exposing a network application that is running as one or more Pods in your cluster. It enables communication between the outside world and the Pods, and between Pods inside the cluster.

Fig. 3 - Service as a means to establish communication between Pods, Users and Database

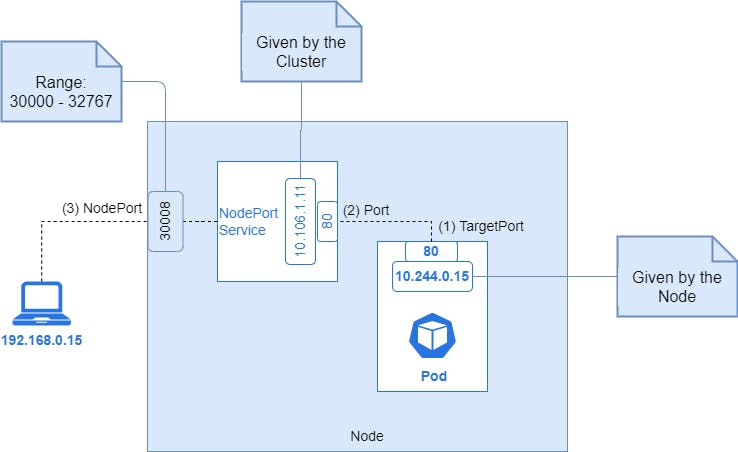

NodePort Service

A NodePort Service builds on top of a ClusterIP Service. It exposes the application on a static port on every Node, which makes the Pods reachable from outside the cluster.

Fig. 4 - NodePort Service establishes communication between the outside world and a Pod in a Node

Fig. 4 shows a NodePort Service establishing communication between a Pod and a computer outside the cluster. The details of how it works are out of the scope of this article.

To create a NodePort Service, write a definition file and apply it to the K8s cluster.

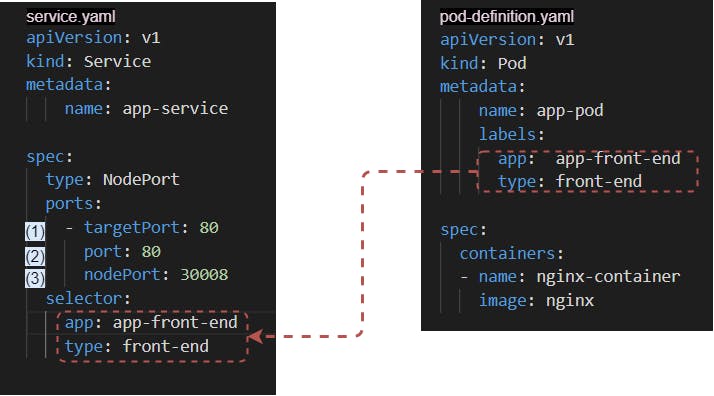

Fig. 5 - NodePort definition file for a Pod called "app-pod"

The numbers in parentheses correspond to the elements marked with the same numbers in Fig. 4.

The "service.yaml" file creates the NodePort Service. The Service is configured in the spec section:

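type: the type of Service (here, NodePort);

ports: the ports that enable communication, namely port (the Service's own port), targetPort (the port the Pod's container listens on) and nodePort (the port opened on every Node);

selector: the labels, defined in "pod-definition.yaml", that identify the Pods the Service forwards traffic to.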

To apply the definition file, run: kubectl apply -f service.yaml
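Figure 5 is an image, so below is a text sketch of the two files involved. The Pod name app-pod comes from the figure caption. The label app: my-app, the Service name, the image and the port numbers are assumptions, and the file in Fig. 5 may use different values.

```yaml
# pod-definition.yaml: the Pod targeted by the Service.
apiVersion: v1
kind: Pod
metadata:
  name: app-pod
  labels:
    app: my-app                  # hypothetical label; the Service selects on it
spec:
  containers:
    - name: app
      image: nginx:1.25          # placeholder image listening on port 80
      ports:
        - containerPort: 80
---
# service.yaml: exposes app-pod on port 30008 of every Node.
apiVersion: v1
kind: Service
metadata:
  name: app-nodeport-service     # hypothetical name
spec:
  type: NodePort
  selector:
    app: my-app                  # must match the Pod's labels
  ports:
    - port: 80                   # port on the Service's cluster IP
      targetPort: 80             # containerPort on the Pod
      nodePort: 30008            # port on each Node (default range 30000-32767)
```

Once the files are applied, the application should be reachable at http://<node-ip>:30008 from outside the cluster, provided that firewall rules allow traffic to that port.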

ClusterIP

A Pod can be terminated and recreated at any time, and its IP address is assigned dynamically from the Node's subnet. A recreated Pod therefore usually gets a different IP address, so other Pods that relied on the old address can no longer reach it.

The ClusterIP Service solves this problem.

A ClusterIP Service (the default Service type) gives a set of Pods a stable virtual IP address inside the cluster. It is used by Pods that need to communicate with other Pods.

Fig. 6 - Different Pods that communicate using a ClusterIP Service

Fig. 6 shows the Frontend Pod using the ClusterIP Backend Service to communicate with the Backend Pod. If the Backend Pod is terminated and recreated, it gets a new IP address. It stays reachable because the ClusterIP Backend Service keeps forwarding traffic to whichever Pod matches its selector.

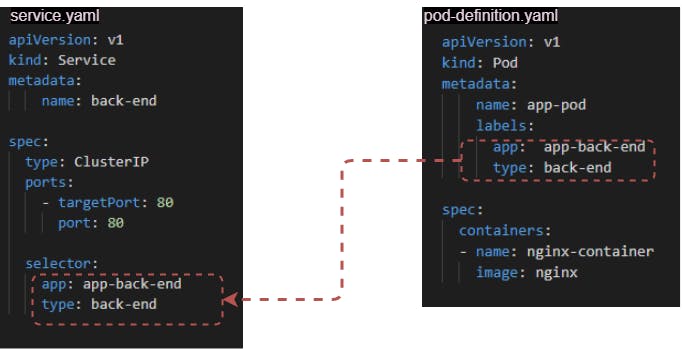

Fig. 7 - Definition file "service.yaml" for a ClusterIP Service to enable permanent communication with a Pod called "app-back-end"

The "service.yaml" file creates the ClusterIP Service. The Service is configured in the spec section:

type: the type of Service (here, ClusterIP);

ports: the ports that enable communication, namely port (the port other Pods use to reach the Service) and targetPort (the port the back-end container listens on);

selector: the labels, defined in "pod-definition.yaml", that identify the "app-back-end" Pod.

To apply the definition file, run kubectl apply -f service.yaml.
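Below is a text sketch of a ClusterIP definition for the "app-back-end" Pod. The Service name backend-service, the label app: back-end and port 8080 are assumptions, and Fig. 7 may use different values.

```yaml
# service.yaml: a stable in-cluster address for the app-back-end Pod.
apiVersion: v1
kind: Service
metadata:
  name: backend-service          # hypothetical name; also used as its DNS name
spec:
  type: ClusterIP                # the default type, so this line may be omitted
  selector:
    app: back-end                # hypothetical label set on the app-back-end Pod
  ports:
    - port: 80                   # port other Pods connect to
      targetPort: 8080           # hypothetical port the back-end container listens on
```

Assuming the cluster DNS add-on and the default cluster.local domain, the Frontend Pod can then reach the back end at http://backend-service from the same namespace. From any namespace it can use backend-service.<namespace>.svc.cluster.local. Either name works regardless of the Backend Pod's current IP address.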

Load Balancing

Load balancing distributes incoming requests across several servers. This keeps applications responsive under heavy load, for example when many users access them at once.

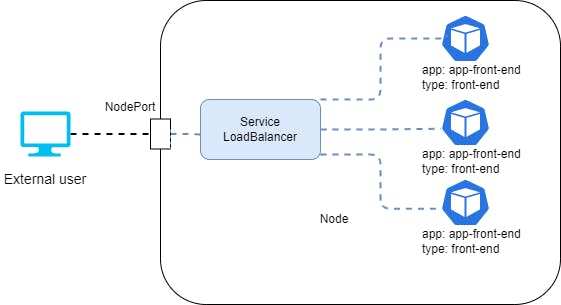

Fig. 8 - A user accessing an application through a LoadBalancer Service

This is achieved by running more than one server with the same application. Incoming traffic reaches the load balancer, which forwards each request to one of the servers assigned to it. In K8s, these servers are the Pods selected by the Service.

The process is transparent to the user or service making the request. This setup also makes it easy to scale resources up or down dynamically, even without human intervention when an autoscaler is used.
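Scaling without human intervention is usually done with a HorizontalPodAutoscaler. The sketch below targets a hypothetical Deployment named app-deployment. It assumes that the metrics-server add-on is installed and that the containers declare CPU requests, because utilization is measured against those requests.

```yaml
# Hypothetical autoscaler for a Deployment named "app-deployment".
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: app-hpa                  # hypothetical name
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: app-deployment         # hypothetical Deployment to scale
  minReplicas: 2                 # never fewer than 2 Pods behind the Service
  maxReplicas: 10                # never more than 10
  metrics:
    - type: Resource
      resource:
        name: cpu
        target:
          type: Utilization
          averageUtilization: 70 # aim for about 70% of requested CPU on average
```

New replicas carry the same labels as the existing ones, so the Service starts sending them traffic without any change to its definition.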

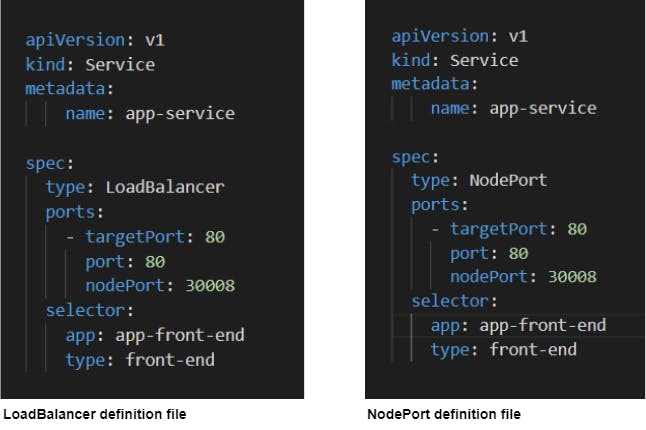

Fig. 9 - LoadBalancer definition file vs. NodePort definition

Fig. 9 shows that LoadBalancer and NodePort definition files are practically the same and differ only in type. If no load balancer is available to the cluster (for example, on a cluster without a cloud provider integration), the LoadBalancer Service behaves like a NodePort Service. To apply the LoadBalancer definition file, run kubectl apply -f <load balancer file>.
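Below is a text sketch of a LoadBalancer definition. Only the type differs from a NodePort file. The Service name and the app: my-app label are assumptions.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: app-loadbalancer-service # hypothetical name
spec:
  type: LoadBalancer             # the only field that differs from a NodePort file
  selector:
    app: my-app                  # hypothetical label of the target Pods
  ports:
    - port: 80                   # port exposed by the external load balancer
      targetPort: 80             # containerPort on the Pods
```

After the file is applied, kubectl get service app-loadbalancer-service shows the EXTERNAL-IP assigned by the provider. Without a load balancer integration, that column stays <pending>, while the automatically allocated node port still works.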

Deployment

When a Deployment definition is applied, the Deployment creates a ReplicaSet. The ReplicaSet is the Workload Resource that keeps the Pods described in the Deployment's Pod template running.
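Below is a minimal sketch of a Deployment that runs three replicas. The name, label and image are assumptions chosen for illustration.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: app-deployment           # hypothetical name
spec:
  replicas: 3                    # the ReplicaSet keeps 3 Pods running
  selector:
    matchLabels:
      app: my-app                # must match the Pod template labels below
  template:                      # Pod definition used for every replica
    metadata:
      labels:
        app: my-app
    spec:
      containers:
        - name: app
          image: nginx:1.25      # placeholder image
          ports:
            - containerPort: 80
```

After kubectl apply -f deployment.yaml, kubectl get replicasets shows the ReplicaSet that the Deployment created. If you change the image and apply the file again, the Deployment creates a new ReplicaSet and gradually moves the Pods over to it.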

K8s Management tools

Several tools are available for interacting with and managing a K8s environment.

kubectl - CLI for interacting with a K8s cluster. It can create and manage resources such as Pods, Deployments and Services

kubeadm - for creating K8s clusters

Minikube - developer tool to create a local K8s cluster (a single Node by default) on a personal computer

Helm - package manager that bundles K8s resources into templated charts, so the same Helm chart can be reused in the dev and prod environments with different values

Kompose - helps to translate Docker Compose files into K8s objects

Kustomize - like Helm, it lets the same configuration be reused across environments. Unlike Helm, it uses no templates: it customizes plain K8s YAML through bases, overlays and patches, and it is built into kubectl (kubectl apply -k)
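Below is a minimal sketch of a Kustomize production overlay. It assumes a hypothetical layout in which base/ holds the plain manifests (including its own kustomization.yaml and a Deployment named app-deployment) and overlays/prod/ holds this file.

```yaml
# overlays/prod/kustomization.yaml (hypothetical layout)
apiVersion: kustomize.config.k8s.io/v1beta1
kind: Kustomization
resources:
  - ../../base                   # reuse the shared base manifests unchanged
namespace: prod                  # place every resource in the "prod" namespace
images:
  - name: nginx                  # wherever this image is used...
    newTag: "1.25"               # ...pin it to this tag
replicas:
  - name: app-deployment         # hypothetical Deployment defined in the base
    count: 5                     # run more replicas in production
```

Running kubectl kustomize overlays/prod prints the resulting manifests, and kubectl apply -k overlays/prod applies them. A dev overlay would point at the same base with different values.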

References

The Kubernetes Authors. (n.d.). Architecture of Cloud Controller Manager. Kubernetes Documentation. Retrieved March 12, 2023, from https://kubernetes.io/docs/concepts/architecture/cloud-controller/#design

The Kubernetes Networking Guide. (n.d.). NodePort. Retrieved March 12, 2023, from tkng.io/services/nodeport

NGINX. (n.d.). What Is Load Balancing? NGINX. Retrieved March 21, 2023, from https://www.nginx.com/resources/glossary/load-balancing/

AVI Networks. (2017). Load Balancing 101 - Learn All About Load Balancers. Avi Networks. Retrieved March 21, 2023, from https://avinetworks.com/what-is-load-balancing/

The Kubernetes Authors. (n.d.). Service: Type LoadBalancer. Kubernetes Documentation. Retrieved March 21, 2023, from https://kubernetes.io/docs/concepts/services-networking/service/#loadbalancer

The Kubernetes Authors. (2023, February 18). Deployments. Kubernetes Documentation. Retrieved March 22, 2023, from https://kubernetes.io/docs/concepts/workloads/controllers/deployment/